This may be a revelation to some… there is nothing new in the toolsets of continuous improvement (TPS, OpEx, Lean, Six Sigma, Agile or whatever you want to call it). Even the newest tools were derived at least two decades before anyone gave them their current labels. There are three really valuable aspects of current approaches:

- A logical roadmap for the application of the tools

- All of the really bright people out there trying to advance the practice of change management

- Organizations that understand Constancy of Purpose, at least for a few years at a time.

I will tell you some stories about the current practitioners. First I want to ground us in the real pioneers that led us to this point. I am certain that each of the people I will mention here had a similar group of mentors. My journey began in the early 70’s and I can only tell the story from there.

I believe I am putting these in chronological order of their impact on my development. My personal giants and why:

Dr. Lee Weaver – Lee is probably the best professor I ever met and he instilled in me a passion for statistics, specifically applied to Quality and Reliability. Lee also worked for Honeywell and so, from the beginning, I was taught the practical side of what were typically very theoretical subjects.

Bill Mitchell – A Scottish gentleman (and I mean that in the finest sense of the word) who NCR had the foresight to make my boss very early in my career. Bill was the first boss I knew who believed his job was to coach and mentor. He taught me that all these decisions we make with increasingly complex toolsets had to be, first and foremost, sound business decisions. He also taught me about truly supporting your people. He said to me the day I went to work for him –

“I will always support you publicly no matter what you do. If I believe you have done something wrong, I will call you behind closed doors and discuss it with you. I will listen to your perspective; you will listen to mine and we will decide what to do. We will both own the decision and never walk out of my office saying that we are doing anything other than what we think is right. Anything else will weaken both of us.”

I can tell you that Bill was the best boss and mentor I ever had and he lived up to his words. I have honestly tried to structure the same relationship with all of my bosses and with all that I have influenced since that day. I can tell you that Bill’s methods caused him considerable pain because I certainly tested the limits. I can also tell you that I have experienced considerable pain because I support people in the same way. Nonetheless, I grew and learned under Bill’s wing and I have seen hundreds of people really blossom and grow when allowed to have freedom to do their job. I wouldn’t have it any other way.

Bill also gave me the job of defining the Quality System at a point in time when I did not have a clue what that meant. It was the greatest gift I ever received because now I understand that all of the wonderful “silver bullets” that are sold today have to logically fit into a system or they have no long-term value. Remember that the “silver bullets” are not the system. Also remember that being judged to have an adequate system by ISO or a customer doesn’t mean you actually have a good system – what you do has to make sense and flow.

Joseph Juran – I was first exposed to Juran in two ways. I inherited a copy of his Quality Control Handbook early in my career and had the opportunity to lead a group going through “Juran on Quality Improvement”, a videotape series meant to teach people a structured approach to problem solving. I got a copy of Juran’s Managerial Breakthrough as part of that. That book is the basis of what is now known as Lean and Six Sigma. I learned two significant lessons from the book: 1) all change happens project by project and 2) breakthrough has to be approached by first taking the time to understand the underlying process (the ‘journey from symptom to cause’ to quote Juran) before ever trying to solve the problem. Juran’s thoughts on the Quality System expressed in his Trilogy are right. I can still pass ASQ’s CQE, CRE, or CSSBB exam with Juran’s Handbook as my only reference. I had the chance to meet him twice – he was gracious and generous with his time on both occasions.

Bob Galvin – Bob is the son of the founder of Motorola and was CEO of Motorola when I joined them in 1983. Bob brought participative management (PMP) to Motorola a few years before I joined and began a serious push toward improving quality the year before I joined. I will tell you that Motorola was the most exciting place I ever worked and that for the eight years I worked there, I learned something new every day. I attribute to Bob the opportunity to learn and be excited about my job. Participative Management made us understand differences in people and to respect everyone in the organization. The push for improved quality forced us to find useable tools. It was not acceptable NOT to have real improvement on a daily, weekly, monthly basis. It WAS acceptable to try new things, but fail, at Motorola. We were just expected to learn from these failures!

Bob also introduced Cycle Time Reduction (what we called Lean before Womack) in 1985 and, although it is not publicized, time was the real catalyst that made all of the quality improvement tools real. Go look at the corporate metrics from 1986 forward and you will find that time reduction was right there, equal to defect reduction. In simple terms, we found we could reduce defects without impacting the basic flow (read that as real cost), but we could not truly impact flow without addressing defects. So by linking time and defects, we found the defect reduction tools useful and also discovered their impact to the bottom line.

Bob changed the measurement system, which gave people who truly believed in this an umbrella under which to operate with complete freedom. I believe Bob will be recognized over time as the greatest corporate champion of all time (sorry Jack Welch, but I knew someone greater than you). Bob is also the model for training the workforce. Motorola had required a minimum of 40 hours per employee per year (that is all employees, not just some). Several of my eight years, I had more than 300 hours.

W. Edwards Deming – The only thing I want to tell you is that Deming was right and if you do not understand that – go read Deming. If your only takeaway is to embrace his 14 points in how you behave as a leader, your organization will improve dramatically. I have read everything Deming ever published and I think the best was Quality, Productivity, and Competitive Position because it was pure Deming – no editing. Remember that he did not say, “Drive out training and institute fear;” he said just the opposite. Also, remember that he did not say NOT to set goals; he said don’t set goals without also providing methods and tools to achieve them.

One of his fourteen points is the most critical – Create constancy of purpose for improving products and services. TPS is probably the best long term example of this. Most companies fail in the long term whether they call it TPS, Lean, Agile, Six Sigma, … because they get new leadership who lose the Constancy – it never works out well.

I had the chance to meet Deming several times at Motorola’s expense and even had dinner with him several times. He was also gracious and generous but not the least bit impressed by labels, especially Six Sigma and Lean. He wanted to know what I was actually doing and was impressed by the work at Motorola.

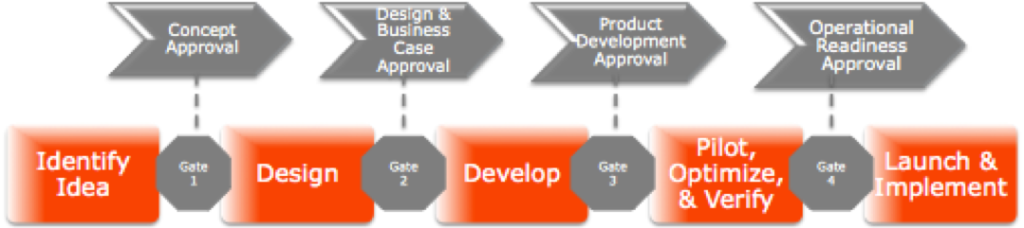

John Lupienski – In my opinion, John was, and still is, one of the most influential forces on what Motorola calls Lean and Six Sigma today. For example, John and I first documented the roadmap that is used by most providers of Black Belt training back in 1988. John recognized the need for the roadmap. Those who are claiming all of the credit for the roadmap and all of the buzzwords around it did not even have it right when it was sold to AlliedSignal and GE. This point is easy to prove by researching all of the “intellectual property” sold to these two companies. John has always known the next logical step to take in this journey and has driven it regardless of the opposition he confronts. He is also a great teacher who has been sharing his knowledge with everyone involved with ASQ in the Buffalo, N.Y. area for over a quarter of a century. John remained loyal to Motorola and Buffalo even though it is clear he could have advanced his career, his fortune, and his personal notoriety by following the path taken by many of us.

Marty Rayl – Marty is simply the best champion I have ever experienced. Most who have had to deal with Marty would tell you some very unpleasant stories. If you did not cooperate with Marty’s folks at Motorola in the late 80’s, Marty would provide you with one of the most unpleasant experiences you would ever hope to avoid in corporate America. His message? Cooperate with my people or you get to deal with me! The Automotive group of Motorola made outstanding improvements during Marty’s time there. Marty also taught me to have a “book budget” – to give away books to anyone who would obligate themselves to use them. I maintain the model to this day.

Steve Zinkgraf – Steve made the consulting model work. The intellectual property sold to AlliedSignal and GE was unusable and Steve created the backbone of all the training materials that resulted from those efforts while he was an employee of AlliedSignal. My team at AlliedSignal Automotive finished out the backbone Steve created. Most of you were trained using a derivation of AlliedSignal’s intellectual property. Steve taught me to teach DOE in simple useable language. Steve’s and my work with Minitab in the late 80’s through 1998 set the stage for much of the functionality that exists in Minitab that is specifically geared toward the Continuous Improvement community.

Richard Schroeder – the only person I know superior to John Lupienski in knowing what to do and equal to Marty Rayl when it comes to supporting his people. He is unrivaled at challenging the thinking of the C-suite. Rich has had unprecedented influence in corporate America with Galvin, Bossidy, and Welch at the top of the list of persons he has affected. His influence continues today. He alone set the course for GE and AlliedSignal long after both companies tired quickly of the Mikel Harry magic act. Rich is the reason they stayed the course and therefore the reason for an amazing run of profit improvement. You can see Rich’s impact if you look at the profits and stock price of AlliedSignal (1995 – 1999), GE (1996 – 2001), Kraft Foods (2010 – 2013), and dozens of other Fortune 500 companies. Remember Deming’s constancy of purpose? That is what Rich brought in those time periods.

Jack Welch – I do not worship at the feet of Jack Welch like many do, but it has to be noted that he created the largest culture of grasping change and driving it ever seen in the history of the business world. What was called Lean and Six Sigma at GE in the late 90’s is the culmination of twenty plus years of groundwork laid by Jack and his staff. Every leader in business should hope to have a fraction of the influence Jack has had. It also should be noted that GE had the weakest Six Sigma and Lean practitioners of all the companies implementing in the late 90’s. They succeeded on the strength of the GE culture and most were mediocre when they went elsewhere. Jack brought Constancy of Purpose to GE from 1980 through his retirement. The Constancy went away within days of his retirement and you can also see that in their profits and stock price – both tanked.

Are there others? Absolutely. There are brave people through this whole thing that contributed tools and leadership. For example, I would read everything Ohno and Shingo wrote. They are not the ones claiming ownership or to be creators of all this. They are just people who did some great things at the right time, and all were open to sharing their knowledge. I thought you might want to know about some of them.

Great things can be done in a few years. Basically nothing can be done for those who seek change by end of the month or end of the quarter.

If you only read one book in your career, make it Deming’s Out of the Crisis.

— Gary

By Dewan Simon, GPS Consultant

Production problems keep appearing and there isn’t information to fix them? Scott Widener offers a way to use what you already have in hand.

Guest post by Rob Wardlow.